Paulo Henrique de Lima Santana: Bits from FOSDEM 2023 and 2024

Link to the Portuguese version


Intro

Since 2019, I have traveled to Brussels at the beginning of each year to join FOSDEM, considered the largest and most important Free Software event in Europe. The 2024 edition was the fourth in-person edition in a row that I joined (the 2021 and 2022 in-person editions did not happen due to COVID-19), always with the financial help of Debian, which kindly paid for my flight tickets after my travel-support request was approved by the Debian Leader.

In 2020 I wrote several posts with a very complete report of the days I spent in Brussels. But in 2023 I didn't write anything, and because last year and this year I coordinated a room dedicated to translations of Free Software and Open Source projects, I'm going to take the opportunity to write about these two years and what my experience was like.

After my first trip to FOSDEM, I started to think that I could take part more actively than as a regular attendee, so I wanted to propose a talk to one of the rooms. But then I thought that instead of proposing a talk, I could organize a room for talks :-) on the topic of translations, which is something I'm very interested in, because I've been helping to translate Debian into Portuguese for a few years.

Joining FOSDEM 2023

In the second half of 2022 I did some research and saw that there had never been a room dedicated to translations, so when the FOSDEM organization opened the call for room proposals (rooms are called DevRooms) for the 2023 edition, I sent a proposal for a translation room and it was accepted!

After the room was confirmed, the next step was for me, as room coordinator, to publicize the call for talk proposals. I spent a few weeks waiting to find out whether I would receive a good number of proposals or whether it would be a failure. But to my happiness, I received eight proposals, and due to time constraints I had to select six for the room schedule.

FOSDEM 2023 took place from February 4th to 5th and the translation devroom was scheduled on the second day in the afternoon.

The talks held in the room are listed below, and each of them has a video recording you can watch.


- Welcome to the Translations DevRoom.

- Paulo Henrique de Lima Santana

- Translate All The Things! An Introduction to LibreTranslate.

- Piero Toffanin

- Bringing your project closer to users - translating libre with Weblate. News, features and plans of the project.

- Benjamin Alan Jamie

- 20 years with Gettext. Experiences from the PostgreSQL project.

- Peter Eisentraut

- Building an atractive way in an old infra for new translators.

- Texou

- Managing KDE's translation project. Are we the biggest FLOSS translation project?

- Albert Astals Cid

- Translating documentation with cloud tools and scripts. Using cloud tools and scripts to translate, review and update documents.

- Nilo Coutinho Menezes

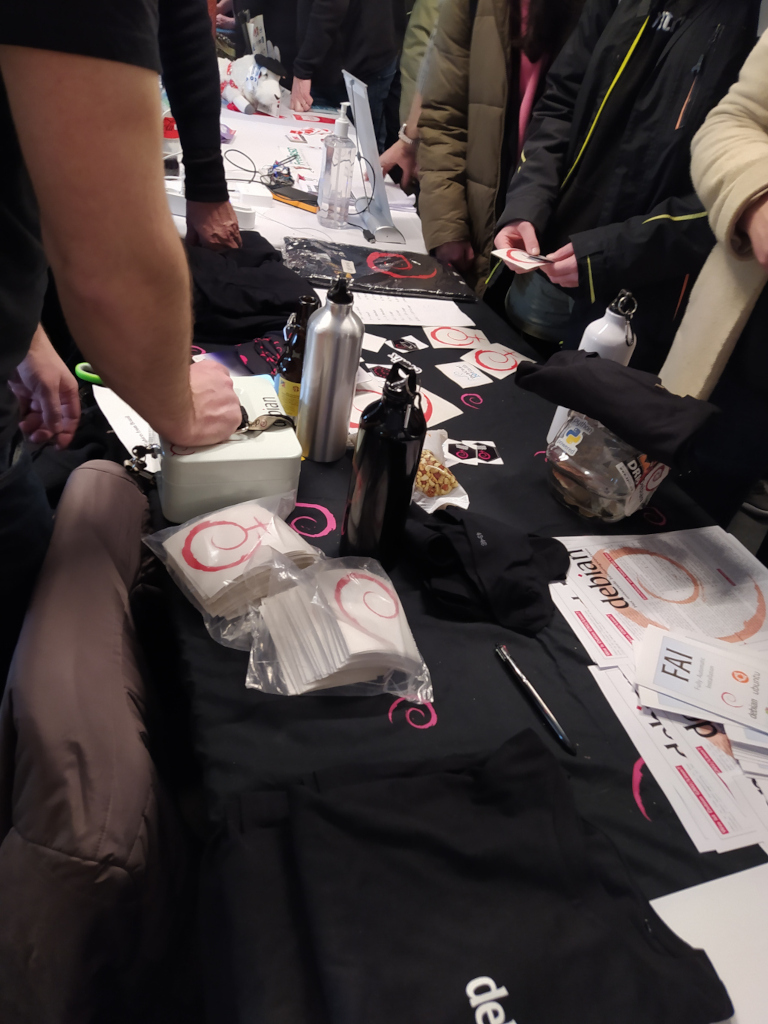

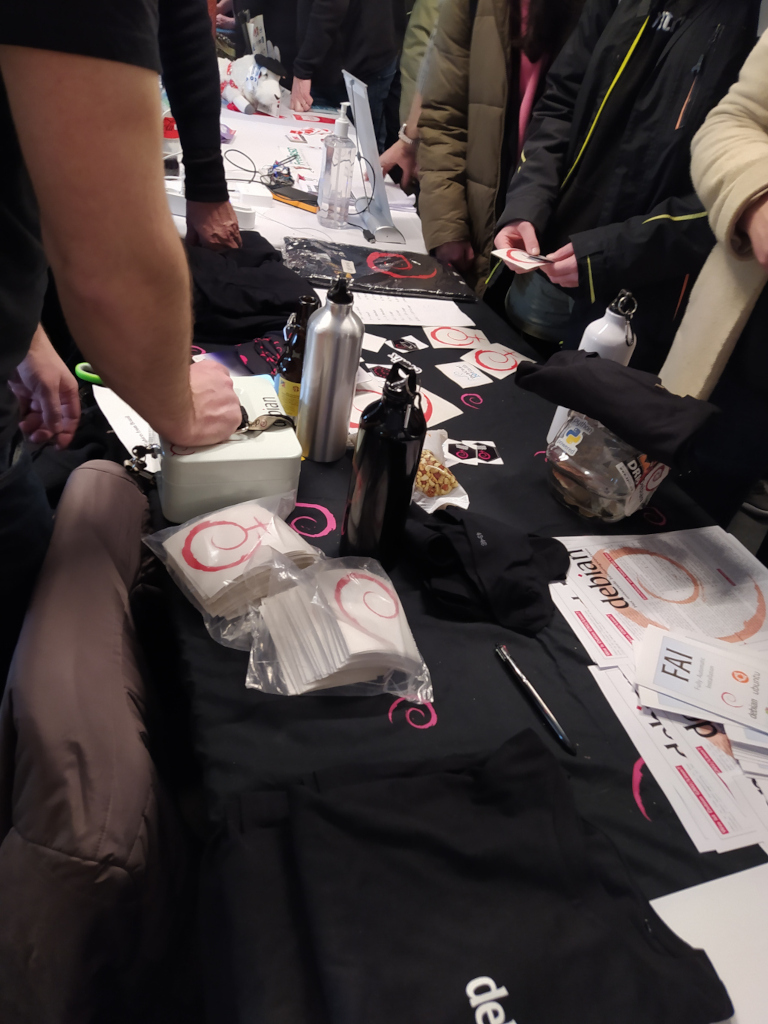

On the first day of FOSDEM I was at the Debian stand selling the t-shirts that I had brought from Brazil. People from France were also there selling other products, and it was cool to interact with people who visited the stand to buy things and/or talk about Debian.

Photos

Joining FOSDEM 2024

The 2023 result motivated me to propose the translation devroom again when the FOSDEM 2024 organization opened the call for rooms. I waited to find out whether the FOSDEM organization would accept a room on this topic for the second year in a row and, to my delight, my proposal was accepted again :-)

This time I received 11 proposals! And again, due to time constraints, I had to select six for the room schedule.

FOSDEM 2024 took place from February 3rd to 4th and the translation devroom was scheduled for the second day again, but this time in the morning.

The talks held in the room are listed below, and each of them has a video recording you can watch.

- Welcome to the Translations DevRoom.

- Paulo Henrique de Lima Santana

- Localization of Open Source Tools into Swahili.

- Cecilia Maundu

- A universal data model for localizable messages.

- Eemeli Aro

- Happy translating! It is possible to overcome the language barrier in Open Source!

- Wentao Liu

- Lessons learnt as a translation contributor the past 4 years.

- Tom De Moor

- Long Term Effort to Keep Translations Up-To-Date.

- Andika Triwidada

- Using Open Source AIs for Accessibility and Localization.

- Nevin Daniel

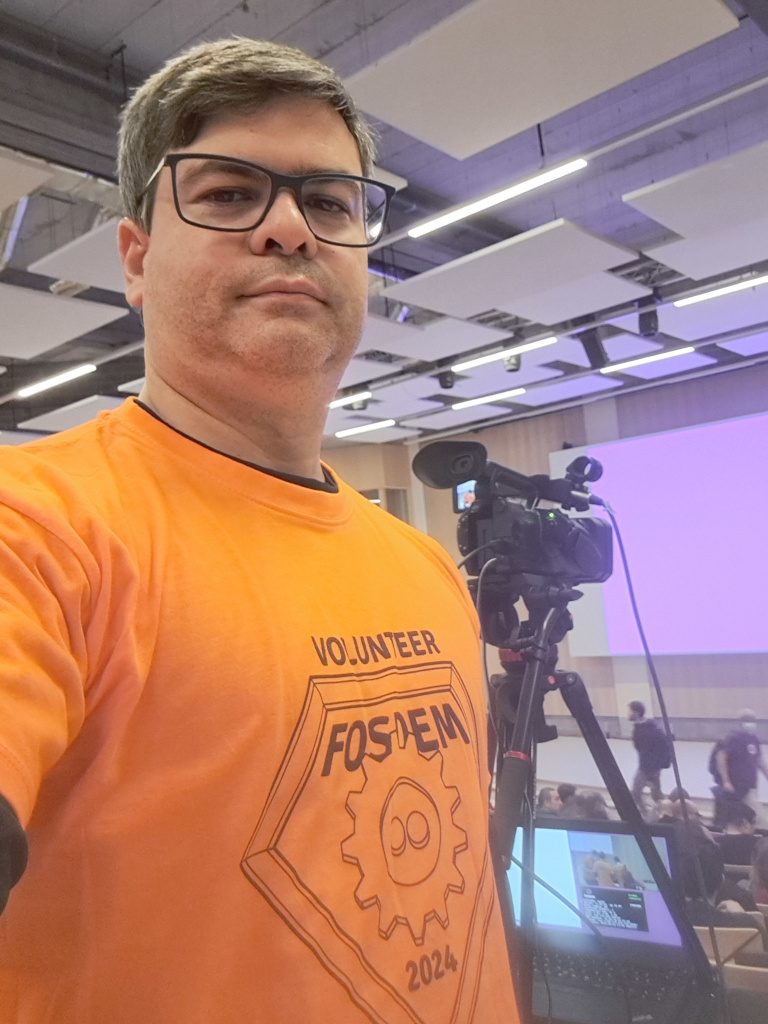

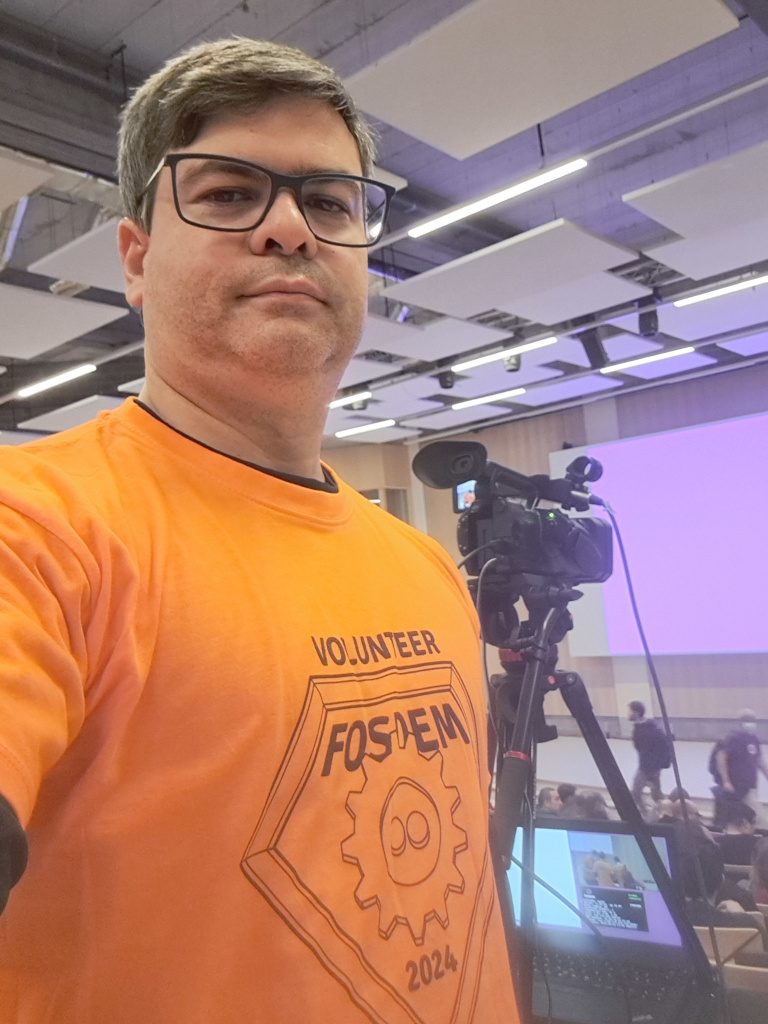

This time I didn't help at the Debian stand because I couldn't bring t-shirts to sell from Brazil. So I just stopped by and talked to some of the people who were there, such as some DDs. But I volunteered for a few hours to operate the streaming camera in one of the main rooms.

Photos

Conclusion

The topics of the talks in these two years were quite diverse, and all the talks were really very good. In the 12 talks we can see how translations happen in projects such as KDE, PostgreSQL, Debian and Mattermost. We had presentations of tools and approaches such as LibreTranslate, Weblate, scripts, AI and a data model for localizable messages. And there were also reports on the work carried out by communities in Africa, China and Indonesia.

The rooms were full for some talks, a little more empty for others, but I was very satisfied with the final result of these two years.

I leave my special thanks to Jonathan Carter, the Debian Leader, who approved my flight ticket requests so that I could join FOSDEM 2023 and 2024. This help was essential for my trips to Brussels because flight tickets are not cheap at all.

I would also like to thank my wife Jandira, who has been my travel partner :-)

As the number of proposals received has increased, I believe that interest in the translations devroom is growing. So I intend to send the devroom proposal to FOSDEM 2025 and, if it is accepted, wait for the future Debian Leader to approve helping me with the flight tickets again. We'll see.


Mee Goreng, a dish made of noodles in Malaysia.


Me at Petronas Towers.


Photo with Malaysians.


March. Busy days.


Update 28.02.2024 19:45 CET: There is now a blog entry at


Like each month, have a look at the work funded by


I have been working on the rather massive AppArmor bug

In late 2022 I prepared a batch of

I started by looping 5 m of cord, making iirc 2 rounds of a loop about the right size to go around the book with a bit of ease, then used the ends as filler cords for a handle, wrapped them around the loop and worked square knots all over them to make a handle.

Then I cut the rest of the cord into 40 pieces, each 4 m long, because I had no idea how much I was going to need (spoiler: I successfully got it wrong :D )

I joined the cords to the handle with lark head knots, 20 per side, and then I started knotting without a plan or anything, alternating between hitches and square knots, sometimes close together and sometimes leaving some free cord between them.

And apparently I also completely forgot to take in-progress pictures.

I kept working on this for a few months, knotting a row or two now and then, until the bag was long enough for the book, then I closed the bottom by taking one cord from the front and the corresponding one on the back, knotting them together (I don't remember how) and finally I made a rigid triangle of tight square knots with all of the cords, progressively leaving out a cord from each side, and cutting it in a fringe.

I then measured the remaining cords, and saw that the shortest ones were about a meter long, but the longest ones were up to 3 meters; I could have cut them much shorter at the beginning (and maybe added a couple more cords). The leftovers will be used, in some way.

And then I postponed taking pictures of the finished object for a few months.


Now the result is functional, but I have to admit it is somewhat ugly: not as much for the lack of a pattern (that I think came out quite fine) but because of how irregular the knots are; I'm not confident that the next time I will be happy with their regularity, either, but I hope I will improve, and that's one important thing.

And the other important thing is: I enjoyed making this, even if I kept interrupting the work, and I think that there may be some other macrame in my future.


After seven years of service as member and secretary on the GHC Steering Committee, I have resigned from that role. So this is a good time to look back and retrace the formation of the GHC proposal process and committee.

In my memory, I helped define and shape the proposal process, optimizing it for effectiveness and throughput, but memory can be misleading, and judging from the paper trail in my email archives, this was indeed mostly Ben Gamari's and Richard Eisenberg's achievement: Already in Summer of 2016, Ben Gamari set up the


I think while developing Wayland-as-an-ecosystem we are now entrenched in narrow concepts of how a desktop should work. While discussing Wayland protocol additions, a lot of concepts clash: people from different desktops with different design philosophies debate the merits of those over and over again, never reaching any conclusion (just as you will never get an answer out of humans whether sushi or pizza is the clearly superior food, or whether CSD or SSD is better). Some people want to use Wayland as a vehicle to force applications to submit to their desktop's design philosophies, others prefer the smallest and leanest protocol possible, other developers want the most elegant behavior possible. To be clear, I think those are all very valid approaches.

But this also creates problems: By switching to Wayland compositors, we are already forcing a lot of porting work onto toolkit developers and application developers. This is annoying, but just work that has to be done. It becomes frustrating though if Wayland provides toolkits with absolutely no way to reach their goal in any reasonable way. For Nate's Photoshop analogy: Of course Linux does not break Photoshop, it is Adobe's responsibility to port it. But what if Linux was missing a crucial syscall that Photoshop needed for proper functionality and Adobe couldn't port it without that? In that case it becomes much less clear who is to blame for Photoshop not being available.

A lot of Wayland protocol work is focused on the environment and design, while applications and the work to port them are often considered less. I think this happens because the overlap between application developers and developers of the desktop environments is not necessarily large, and the overlap with people willing to engage with Wayland upstream is even smaller. The combination of Windows developers porting apps to Linux and having involvement with toolkits or Wayland is pretty much nonexistent. So they have less of a voice.

I will also bring my two protocol MRs to their conclusion for sure, because as application developers we need clarity on what the platform (either all desktops or even just a few) supports and will or will not support in future. And the only way to get something good done is by contribution and friendly discussion.


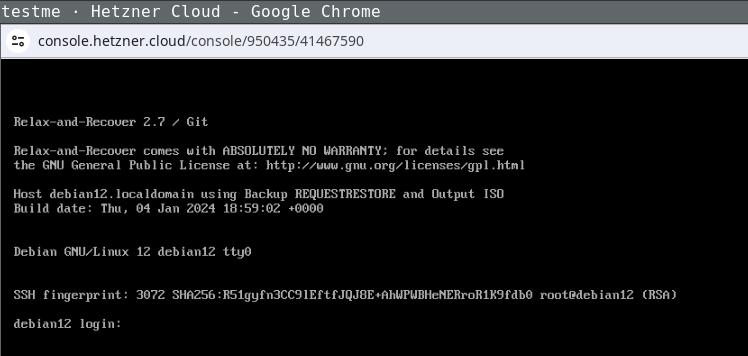

Now copy the ISO image to the newly created instance and extract its data:


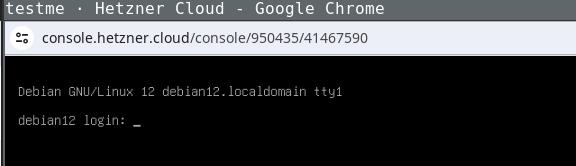

Log in to the recovery console (root without password) and fix its default route to make it reachable:


Being able to use this procedure for complete disaster recovery within Hetzner cloud VPS (using off-site backups) gives me a better feeling, too.


Libraries are collections of code that are intended to be usable by multiple consumers (if you're interested in the etymology, watch
